Why you need people to manage the meaning of AI content

What is your data strategy?

Your first thought might be about first-party data collection, performance metrics, or metadata for targeted content. But that’s not what I’m getting at.

Instead, I ask: What is your strategy for shaping thought leadership, creating stories, building your brand message, and providing sales materials that engage and persuade?

“Wait,” you might say. “Isn’t that a content strategy?”

Well, yes. But also, no.

Is it content or data?

Generative AI blurs the lines between content and data.

When you think about your articles, podcasts, and videos, you probably don’t think of them as “data.” But AI providers do.

AI providers don’t talk about their models learning from “engaging content” or “well-crafted stories.” Instead, they talk about accessing and processing “data” (text, images, audio, and video). AI vendors commonly use “training data” as a term of art for the datasets they rely on for model development and training.

This perspective is not wrong: it is rooted in the history of search engines, where patterns and frequency determined relevance, and search engine “indexes” were just big buckets of files and unstructured text (i.e. data).

No one ever claimed that search engines understood the meaning of every conceivable type of content in their giant bucket. Reducing it to “data” seemed appropriate.

But AI companies are now attributing understanding and intuition to this data. They claim to have all this information and the ability to rearrange it and intuitively find the best answer.

But let’s be clear: AI doesn’t understand. It predicts.

It generates the most likely next word or image – structured information devoid of intent or meaning. Meaning is – and always will be – a human construct resulting from the intentionality behind communication.

Fighting for meaning

This difference underlies the growing tension between content creators and AI providers.

AI providers say the Internet is a vast repository of publicly available data – as available to machines as it is to humans – and that their tools help provide deeper meaning.

Content creators claim that people learn from content imbued with intention, but AI just steals the products and rearranges them without regard for the original meaning.

Interestingly, the conflict arises over something that both agree on: it is the machine that determines meaning.

But that’s not the case.

The Internet makes data (content) available to AI, but only humans can make sense of it.

This makes the distinction between content and data more crucial than ever.

What’s the difference?

A recent study found that consumers show less positive word of mouth and loyalty when they believe emotional content was created by AI rather than by a human.

Interestingly, this study did not focus on whether participants could detect if content was generated by AI. Instead, the same content was presented to two groups: one was told that it had been created by a human (the control group), while the other was told that it had been generated by AI.

The study’s conclusion: “Companies should carefully consider whether and how to disclose AI-created communications.”

Spoiler alert: no one will.

In another study, however, researchers tested whether people could distinguish between AI-generated and human-generated content. Participants correctly identified AI-generated text only 53% of the time – barely better than a random guess, which would achieve 50% accuracy.

Spoiler alert: no, we can’t.

We are programmed to be wrong

In 2008, science historian Michael Shermer coined the word “patternicity.” In his book The Believing Brain, he defines the term as “the tendency to find meaningful patterns in both meaningful and meaningless noise.”

He said that humans tend to “infuse these patterns with meaning, intention, and agency,” calling this phenomenon “agenticity.”

So, as humans, we are programmed to make two types of mistakes:

Type 1 errors, where we see a false positive – we see a pattern that doesn’t exist.

Type 2 errors, where we see a false negative – we miss a pattern that does exist.

When it comes to generative AI, people are likely to make both types of mistakes.

AI vendors and people’s tendency to anthropomorphize technology set people up for Type 1 errors. This is why solutions are marketed as a “co-pilot,” “assistant,” “researcher,” or “creative partner.”

A data-driven content mindset leads marketers to look for patterns of success that may not exist. They risk confusing quick first drafts with agile content without considering whether the drafts offer real value or differentiation.

AI-generated “strategies” and “research” seem credible simply because they are clearly written (and vendors claim the technology taps into deeper insights than people possess).

Many people equate these quick responses with accuracy, unaware that the system is just regurgitating what it has absorbed – truthful or not.

And here’s the irony: our awareness of these risks could lead us to Type 2 errors and prevent us from realizing the benefits of generative AI tools. We might not see patterns that actually exist. For example, if we simply believe that AI always produces average or “not quite true” content, we will fail to see the pattern that shows how effective AI is at solving complex business problems.

As the technology improves, the risk is settling for “good enough” – both in ourselves and in the tools we use.

Recent CMI research highlights this trend. In the 2025 Career Outlook for Content and Marketing study, the most commonly cited use of AI by marketers is “brainstorming new topics.” However, the next five most common answers – each cited by more than 30% of respondents – focused on tasks such as summarizing content, writing drafts, optimizing posts, writing emails, and creating content for social media.

But CMI’s B2B Content Marketing Benchmarks, Budgets, and Trends research reveals growing hesitancy when it comes to AI. Thirty-five percent of marketers cite accuracy as their top concern with generative AI.

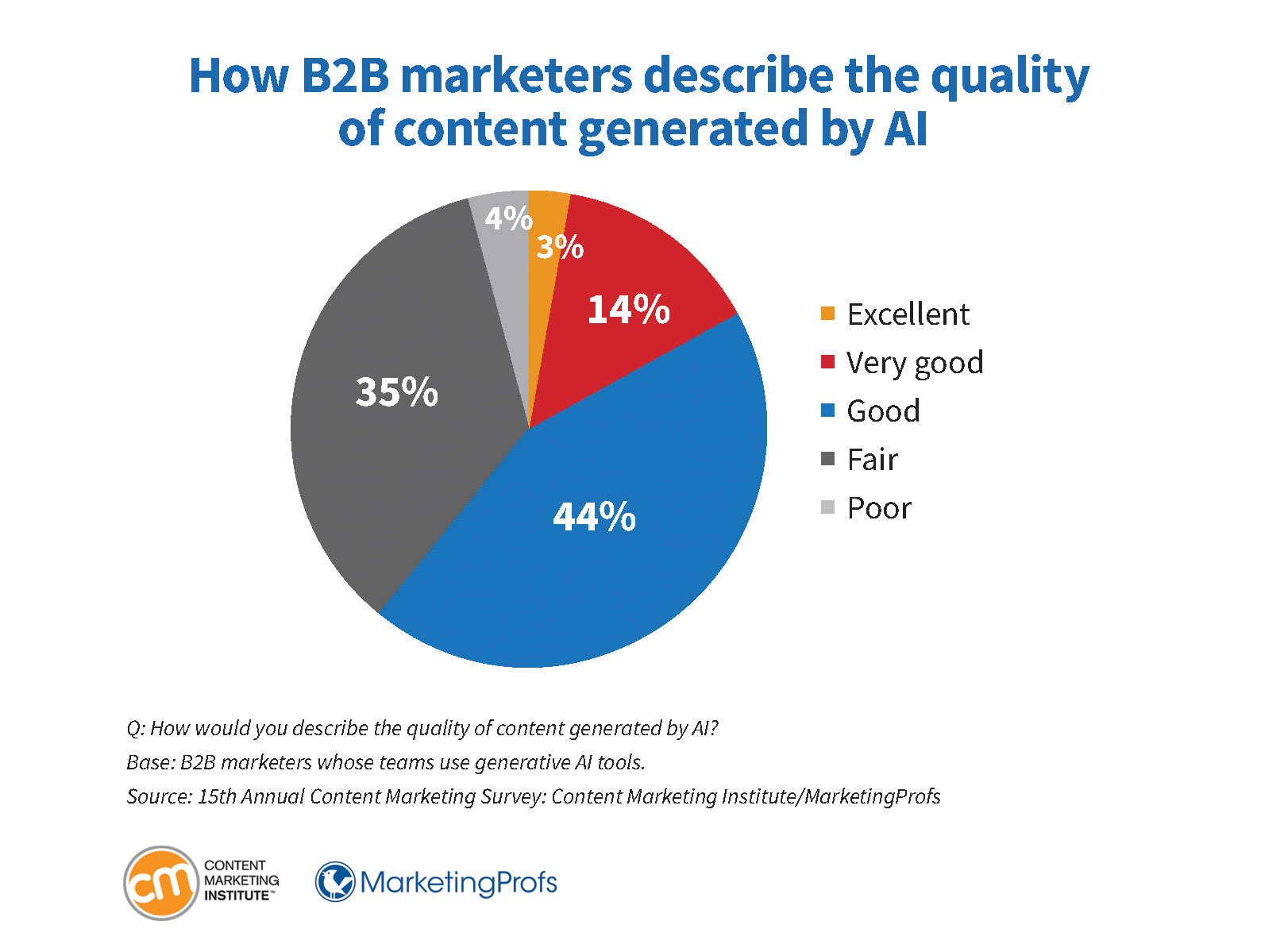

While most respondents report only an “average” level of trust in the technology, 61% still rate the quality of AI-generated content as excellent (3%), very good (14%), or good (44%). Another 35% consider it fair, and 4% consider it poor.

We therefore use these tools to produce content that we consider satisfactory, but we are unsure of its accuracy and have only moderate confidence in the results.

This approach to generative AI shows that marketers tend to use it to produce transactional content at scale. Instead of delivering on the promise that AI will “unleash our creativity,” marketers risk settling for using it to opt out of creative work.

Look for better questions rather than faster answers

The essence of modern marketing lies partly in data, partly in content – and in deeply understanding and making sense of it for our customers. It’s about discovering their dreams, their fears, their aspirations and their desires – the invisible threads that guide them forward.

To paraphrase my marketing hero, Philip Kotler, modern marketing is not just about mind share or heart share. It is about spirit share, something that transcends narrow self-interest.

So how can we, modern marketers, balance all of these things and deepen the meaning of our communications?

First, recognize that the content people create today becomes the data set that will define us tomorrow. Regardless of how it is generated, our content will have inherent biases and varying degrees of value.

For AI-generated content to provide value beyond the data you already have, move beyond the idea of using the technology simply to increase the speed or scale of creating words, images, audio, and video.

Instead, embrace it as a tool to improve the ongoing process of extracting meaningful insights and fostering deeper relationships with our customers.

If generative AI is to become more effective over time, it requires more than just technological refinement: it requires people to evolve. People need to become more creative, more empathetic, and wiser to ensure that the technology and the people who use it do not turn into something meaningless.

Our teams will need more roles, not fewer: roles that can extract valuable information from AI-generated content and transform it into meaningful insights.

The people who fill these positions will not necessarily be journalists or designers. But they will have the skills to ask thoughtful questions, interact with customers and influencers, and transform raw information into meaningful insights through listening, conversation, and synthesis.

The qualities required are similar to those of artists, journalists, talented researchers or subject matter experts. Perhaps this could even be the next evolution of the role of the influencer.

There is still a long way to go.

One thing is clear: If generative AI is to be more than a distracting novelty, businesses need a new role – that of manager of meaning – to guide how AI-generated ideas turn into real value.

It’s your story. Tell it well.



Cover image by Joseph Kalinowski/Content Marketing Institute